In my recent posts, I've been exploring various aspects of home automation, focusing on practical solutions that enhance everyday experiences. For instance, in Automating OPNSense Backups, I detailed how I set up automated backups for my OPNSense firewall, ensuring my network configurations are safe and easily manageable. Additionally, I transformed my home theater setup into a seamless experience using Home Assistant, allowing me to control multiple devices with a single button.

I've also tackled some challenges with integrating Minka Aire fans into my home automation system in How to make Bond fans work better with Home Assistant. By sharing these experiences, I hope to provide insights and inspiration for anyone looking to enhance their own home automation projects.

Avoiding Catastrophe by Automating OPNSense Backups

tl;dr: a Backups API exists for OPNSense. opnsense-autobackup uses it to make daily backups for you.

A few months ago I set up OPNSense on my home network, to act as a firewall and router. So far it's been great, with a ton of benefits over the eero mesh system I was replacing - static DHCP assignments, pretty local host names via Unbound DNS, greatly increased visibility and monitoring possibilities, and of course manifold security options.

However, it's also become a victim of its own success. It's now so central to the network that if it were to fail, most of the network would go down with it. The firewall rules, VLAN configurations, DNS setup, DHCP, etc. are all very useful and deeply embedded - if they go away, most of my network services go down: internet access, home automation, NAS, cameras, and more.

OPNSense lets you download a backup via the UI; sometimes I remember to do that before making a sketchy change, but I have once wiped out the box without a recent backup, and ended up spending several hours getting things back up again. That was before really embracing things like local DNS and static DHCP assignments, which I now have a bunch of automation and configuration reliant on.
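To make the idea concrete, here is a minimal Python sketch of what a daily backup job could do with that API. It is not the code from opnsense-autobackup. The endpoint path, the `opnsense-config-<date>.xml` file naming, and all function names are assumptions for illustration; depending on your OPNSense version, the backup endpoint may live at a different path or require a plugin. The one firm detail is that the OPNSense API authenticates with HTTP Basic auth, using the API key as the username and the API secret as the password.

```python
import base64
import urllib.request
from datetime import date
from pathlib import Path

# Assumption: backup download path on recent OPNSense releases.
# Older installs may expose backups at a different path via a plugin.
BACKUP_PATH = "/api/core/backup/download/this"


def build_backup_request(host: str, api_key: str, api_secret: str,
                         path: str = BACKUP_PATH) -> urllib.request.Request:
    """Build an authenticated GET request for the firewall's config backup."""
    credentials = f"{api_key}:{api_secret}".encode()
    basic = base64.b64encode(credentials).decode()
    return urllib.request.Request(
        f"https://{host}{path}",
        headers={"Authorization": f"Basic {basic}"},
    )


def backup_filename(day: date) -> str:
    """Name each backup after the day it was taken (hypothetical scheme)."""
    return f"opnsense-config-{day.isoformat()}.xml"


def save_backup(host: str, api_key: str, api_secret: str, out_dir: Path) -> Path:
    """Download the current config and write it into out_dir."""
    request = build_backup_request(host, api_key, api_secret)
    with urllib.request.urlopen(request, timeout=30) as response:
        config_xml = response.read()
    out_dir.mkdir(parents=True, exist_ok=True)
    target = out_dir / backup_filename(date.today())
    target.write_bytes(config_xml)
    return target
```

Calling `save_backup("192.168.1.1", key, secret, Path("backups"))` once a day from cron or a small container would give you one dated file per day. If your firewall uses a self-signed certificate, `urlopen` will reject it by default, so you would need to supply a suitable SSL context.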

Automating a Home Theater with Home Assistant

I built out a home theater in my house a couple of years ago, in what used to be a bedroom. From the moment it became functional, we started spending most evenings in there, and got into the rhythm of how to turn it all on:

- Turn on the receiver, make sure it's on the right channel

- Turn on the ceiling fan at the right setting

- Turn on the lights just right

- Turn on the projector, but not too soon or it will have issues

- Turn on the Apple TV, which we consume most of our content on

Not crazy difficult, but there is a little dance to perform here. Turning on the projector too soon means it will never be able to talk to the receiver for some reason (probably to prevent me from downloading a car), so it has to be delayed by the right number of seconds; otherwise you have to go through a lengthy power cycle to get it to work.

It also involves locating no fewer than 5 remote controls, 3 of which use infrared. The receiver is hidden away in a closet, so you have to go in there to turn it on, remote control or no. Let's see if we can automate this so you can turn the whole thing on with a single button.
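The power-on dance above boils down to an ordered list of steps with a pause before the projector. Here is a rough Python sketch of that logic, just to show its shape. In Home Assistant itself this would more naturally be a script or automation. The device names, the 10-second delay, and the `turn_on` callback are all hypothetical placeholders, not values from my actual setup.

```python
import time
from typing import Callable, List, Tuple

# Each step is (device name, seconds to wait before switching it on).
Step = Tuple[str, float]

# Hypothetical sequence: the 10-second projector delay is a placeholder,
# the real value has to be found by trial and error.
STARTUP_SEQUENCE: List[Step] = [
    ("receiver", 0),
    ("ceiling_fan", 0),
    ("lights", 0),
    ("projector", 10),
    ("apple_tv", 0),
]


def run_sequence(steps: List[Step],
                 turn_on: Callable[[str], None],
                 sleep: Callable[[float], None] = time.sleep) -> None:
    """Switch devices on in order, pausing before any step that needs it."""
    for device, delay in steps:
        if delay > 0:
            sleep(delay)
        turn_on(device)
```

Passing `sleep` in as a parameter keeps the sequence easy to test without actually waiting, and `turn_on` can be whatever talks to your devices, for example a function that calls a Home Assistant service.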

How to make Bond fans work better with Home Assistant

I have a bunch of these nice Minka Aire fans in my house:

They're nice to look at and, crucially, silent when running (so long as the screws are nice and tight). They also have some smart home capabilities using the Smart by Bond stack. This gives us a way to integrate our fans with things like Alexa, Google Home and, in my case, Home Assistant.

Connecting Bond with Home Assistant

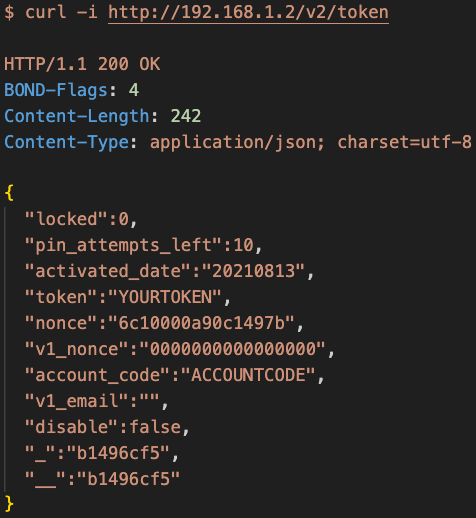

In order to connect anything to these fans you need a Bond Wi-Fi bridge. This is going to act as the bridge between your fans and your network. Once you've got it set up and connected to your Wi-Fi network, you'll need to figure out its IP address. You can then send a curl request to the device to get the access token that you will need to add it to Home Assistant.

If you get an access denied error, it's probably because the Bond bridge needs a proof of ownership signal. The easiest way to do that is to just power off the bridge and power it on again - run the curl again within 10 minutes of the bridge coming up and you'll get your token.
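For reference, the token request can be sketched in Python as well as curl. This is a minimal sketch under assumptions: that the bridge serves its token at `/v2/token` over plain HTTP (as I understand the Bond Local API), that the response is JSON with a `token` field, and that the field is missing while the bridge is locked. The function names are made up for illustration.

```python
import json
import urllib.error
import urllib.request
from typing import Optional


def token_url(bridge_ip: str) -> str:
    # Assumption: the bridge exposes its token at /v2/token.
    return f"http://{bridge_ip}/v2/token"


def parse_token(body: bytes) -> Optional[str]:
    """Return the token from a JSON response body, or None if it is absent."""
    payload = json.loads(body)
    return payload.get("token")


def fetch_token(bridge_ip: str, timeout: float = 5.0) -> Optional[str]:
    """Ask the bridge for its token; None means it was refused or withheld."""
    try:
        with urllib.request.urlopen(token_url(bridge_ip), timeout=timeout) as response:
            return parse_token(response.read())
    except urllib.error.HTTPError:
        # Likely the access denied case: power cycle the bridge and retry
        # within 10 minutes of it coming back up.
        return None
```

If `fetch_token` returns `None`, power cycle the bridge and call it again inside the 10 minute window.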