bragdoc.ai - achievement tracking for software professionals

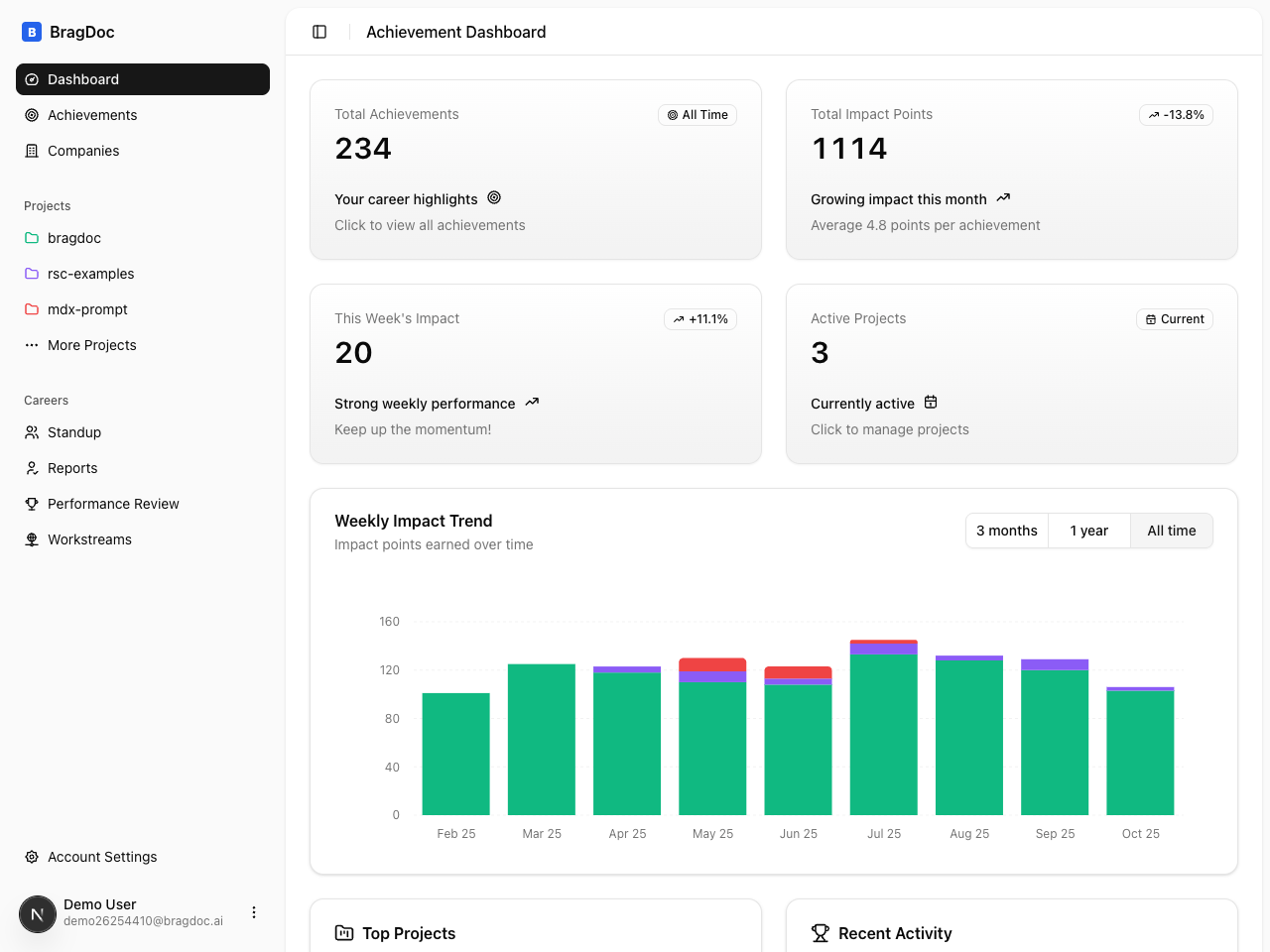

In January 2025 I released the first version of bragdoc.ai, a tool that uses AI to help engineers track their work and generate performance review documents. I built it to scratch my own itch - for years I'd kept an achievements.txt file that I'd inevitably forget to update.

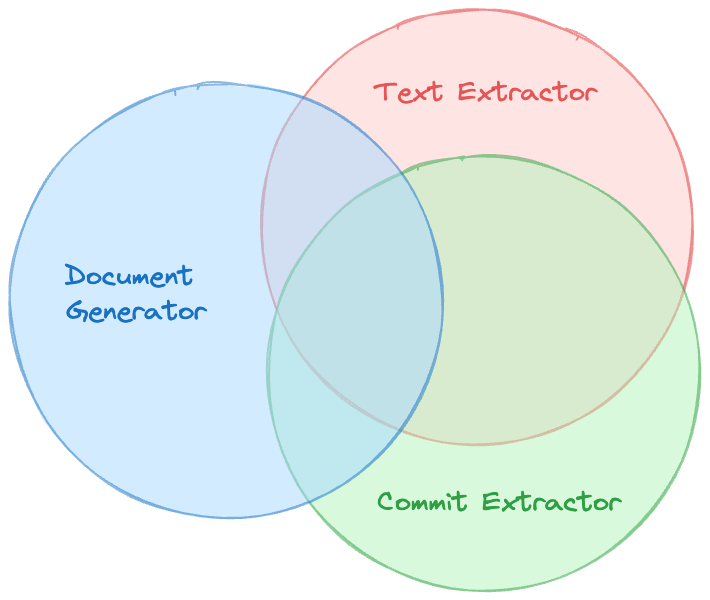

The technical challenge was interesting: how do you automatically extract meaningful accomplishments from git commits without sending proprietary code to third-party APIs? The solution was a CLI that processes git data locally and sends only the extracted achievements to the cloud. Users can choose OpenAI or Anthropic, or run everything locally with Ollama.
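To make the "only achievements leave the machine" idea concrete, here is a minimal sketch. The `Commit` and `Achievement` types and both function names are hypothetical and not taken from the bragdoc.ai codebase, and the extraction step is a stub standing in for whichever LLM provider the user has configured.

```typescript
// Hypothetical types for illustration; not taken from the bragdoc.ai codebase.
interface Commit {
  hash: string;
  message: string;
  diff: string; // proprietary source code that should stay on this machine
}

interface Achievement {
  title: string;
  sourceCommit: string;
}

// Stand-in for the local extraction step. A real CLI might call the user's
// configured LLM provider here; this stub just keeps the first line of each
// non-empty commit message.
function extractAchievements(commits: Commit[]): Achievement[] {
  return commits
    .filter((c) => c.message.trim().length > 0)
    .map((c) => ({
      title: c.message.trim().split("\n")[0] ?? "",
      sourceCommit: c.hash.slice(0, 7),
    }));
}

// Build the only payload that leaves the machine: achievements, never diffs.
function buildUploadPayload(commits: Commit[]): string {
  return JSON.stringify({ achievements: extractAchievements(commits) });
}

const payload = buildUploadPayload([
  { hash: "a1b2c3d4e5f6", message: "Add CSV export\n\nDetails...", diff: "+ const secret = 42;" },
  { hash: "0f9e8d7c6b5a", message: "   ", diff: "+ whitespace only" },
]);
console.log(payload);
```

The point of the design is that the upload payload is built from the extracted achievements alone, so the `diff` field never has a path to the network.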

I open-sourced the entire codebase because I think it's a useful reference for building AI-powered SaaS apps with Next.js. The blog posts below cover the technical details - from the initial 3-week build using AI tooling, to the complete privacy-first rebuild 9 months later.

Recent blog posts about bragdoc.ai

All the posts below are about bragdoc.ai.

Nine months after first building bragdoc.ai, I've rebuilt it from the ground up with a privacy-first architecture, configurable LLM providers, and a proper web UI. Here's what changed and why it matters for engineers tracking their work.

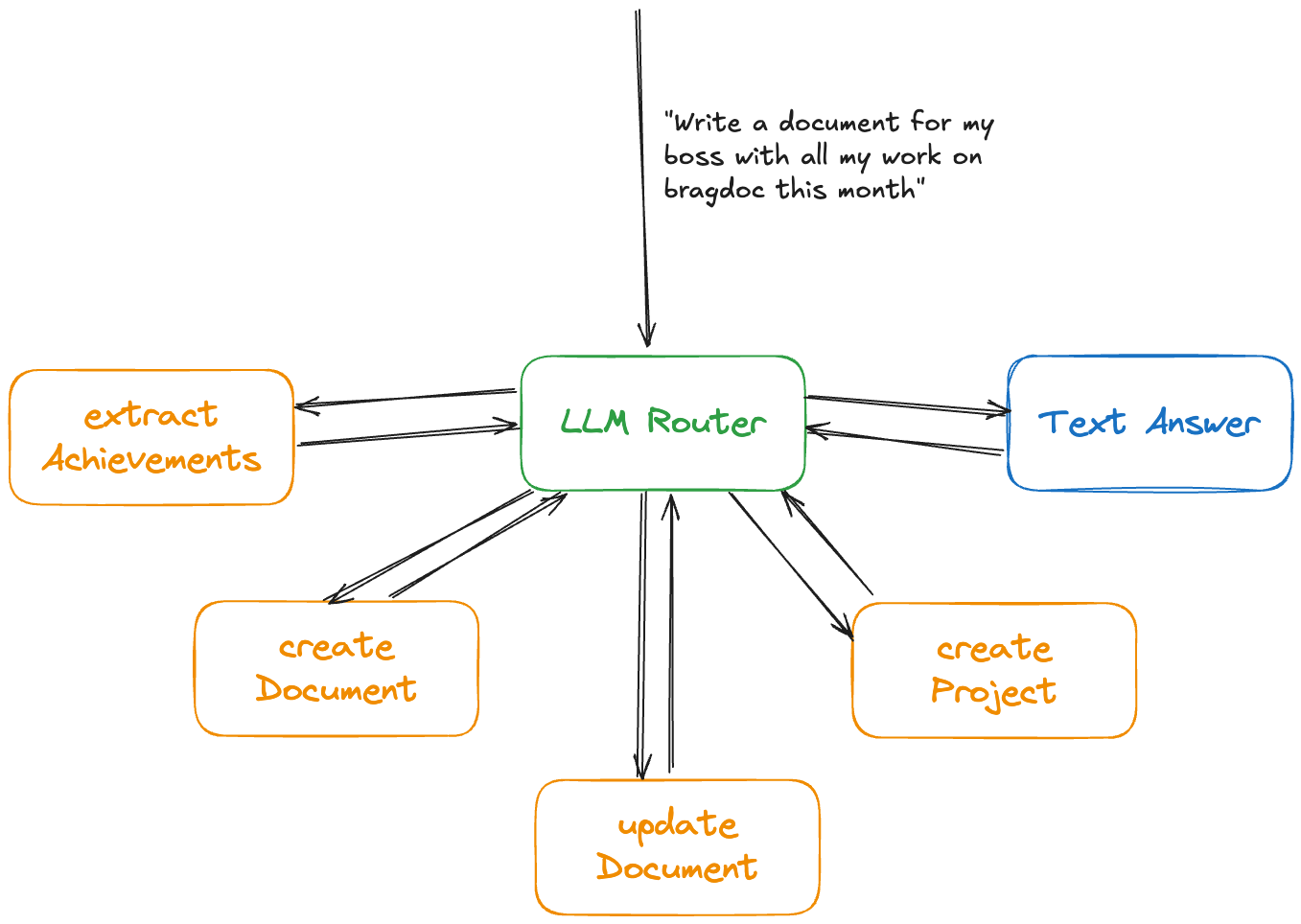

LLM routers are a pivotal pattern in AI apps. They let one LLM call delegate to another: one prompt (the router) is great at deciding what to do, and a set of other prompts is optimized for carrying out the operation the user actually wants. This separation makes AI applications easier to scale and test, and this article shows how to build a router with mdx-prompt and Next.js.
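As a rough illustration of the router pattern, the sketch below uses plain TypeScript rather than mdx-prompt, and takes the LLM call as a parameter so it runs with a stub. The route names, prompt wording, and function names are all assumptions made for the example, not the application's real ones.

```typescript
// Hypothetical route names; the real application's routes may differ.
const ROUTES = ["extract_achievements", "generate_document", "chat"] as const;
type Route = (typeof ROUTES)[number];

// An LLM call reduced to its signature, so any provider or a test stub fits.
type LlmCall = (prompt: string) => Promise<string>;

// The router prompt only decides what to do; it does none of the work itself.
function buildRouterPrompt(message: string): string {
  return [
    `Classify the user message as one of: ${ROUTES.join(", ")}.`,
    "Reply with the route name only.",
    `User message: ${message}`,
  ].join("\n");
}

// Map the router's raw reply to a known route, falling back to "chat".
function parseRoute(raw: string): Route {
  const cleaned = raw.trim().toLowerCase();
  return ROUTES.find((r) => r === cleaned) ?? "chat";
}

// One specialized prompt per route.
const taskPrompts: Record<Route, (message: string) => string> = {
  extract_achievements: (m) => `Extract work achievements as JSON from:\n${m}`,
  generate_document: (m) => `Write a performance review document for:\n${m}`,
  chat: (m) => `Reply helpfully to:\n${m}`,
};

// First call picks the route, second call runs the specialized prompt.
async function routeAndRun(
  message: string,
  llm: LlmCall,
): Promise<{ route: Route; reply: string }> {
  const route = parseRoute(await llm(buildRouterPrompt(message)));
  const reply = await llm(taskPrompts[route](message));
  return { route, reply };
}
```

Because `parseRoute` and each entry in `taskPrompts` are ordinary functions, the routing decision and every specialized prompt can be tested separately, which is the main benefit the pattern promises.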

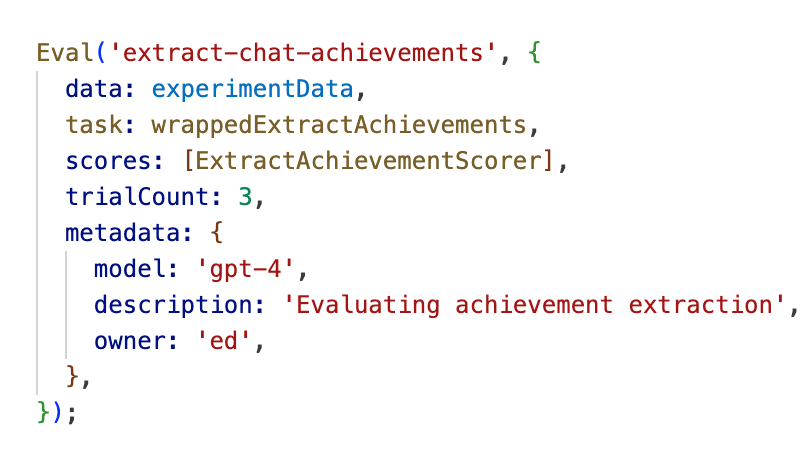

Evals are to LLM calls what unit tests are to functions. They're absolutely essential for ensuring that your LLM prompts work the way you think they do.

This article goes through a real-world example from the in-production open-source application bragdoc.ai, which uses carefully crafted LLM prompts to extract structured work achievement data. We'll go through how to design and build Evals that can accurately and efficiently test your LLM prompts.
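For readers new to the idea, an eval can be sketched as a dataset of cases, a task under test, and a scoring function. The version below is framework-free and is not the setup used in the article; `EvalCase`, `scoreRecall`, and `runEval` are hypothetical names, and the task is passed in so a stub can replace the real LLM call.

```typescript
// Hypothetical shapes for illustration only.
interface Achievement {
  title: string;
}

interface EvalCase {
  input: string; // e.g. a batch of commit messages
  expected: string[]; // achievement titles we hope to see extracted
}

// The thing under test: normally a prompt plus an LLM call.
type ExtractTask = (input: string) => Promise<Achievement[]>;

// Score one case as the fraction of expected titles found (case-insensitive).
function scoreRecall(expected: string[], actual: Achievement[]): number {
  if (expected.length === 0) return actual.length === 0 ? 1 : 0;
  const titles = new Set(actual.map((a) => a.title.toLowerCase()));
  const hits = expected.filter((e) => titles.has(e.toLowerCase())).length;
  return hits / expected.length;
}

// Run every case through the task and return the mean score (0 for no cases).
async function runEval(cases: EvalCase[], task: ExtractTask): Promise<number> {
  if (cases.length === 0) return 0;
  let total = 0;
  for (const c of cases) {
    total += scoreRecall(c.expected, await task(c.input));
  }
  return total / cases.length;
}
```

Unlike a unit test, the result is a score rather than a pass or fail, so a typical use is to set a threshold and watch how the score moves as the prompt changes.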

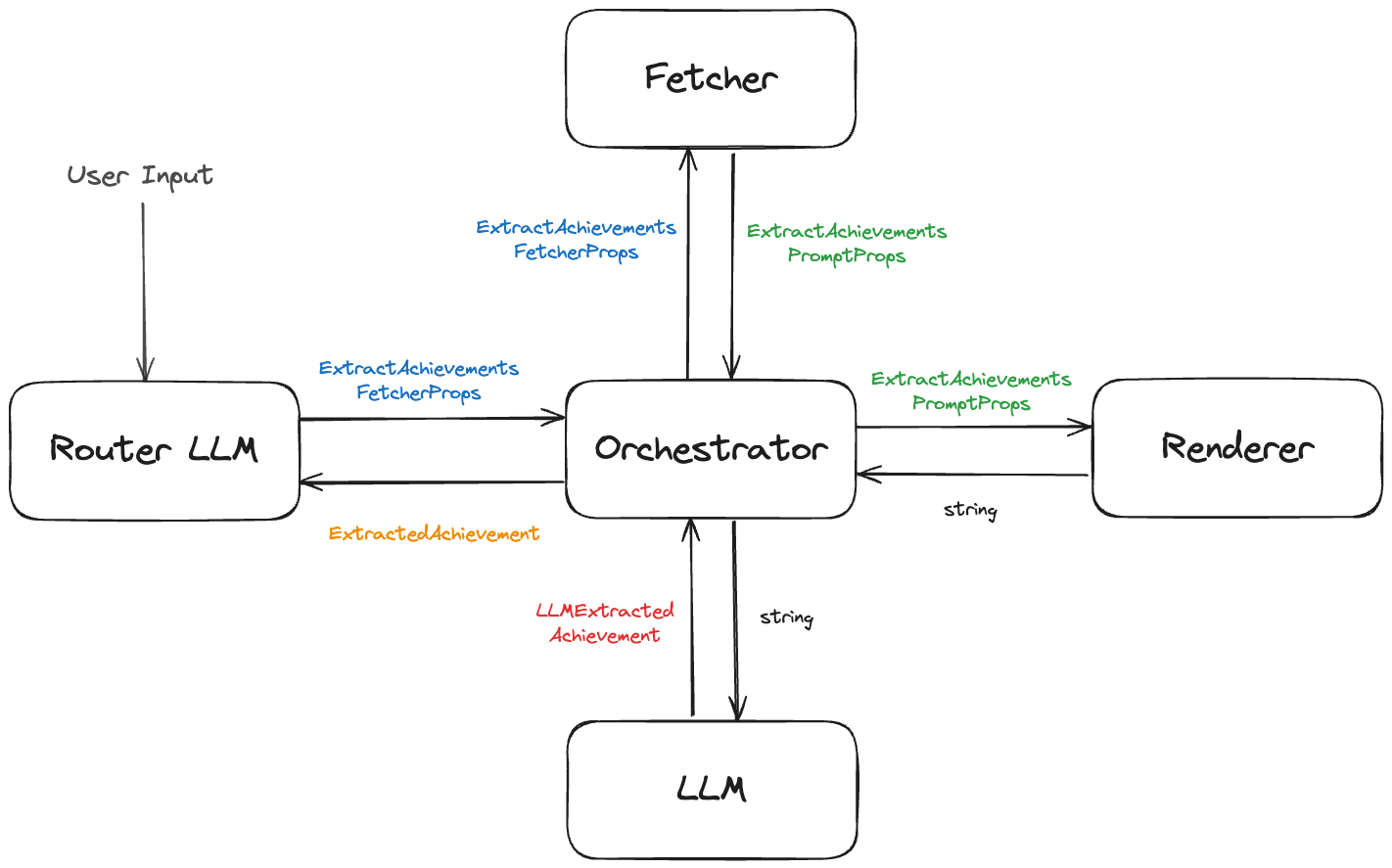

In this article we do a deep dive into how I'm using mdx-prompt for extracting work achievements from git commit messages in the Bragdoc app.

We walk through the half a dozen data formats involved in extracting well-structured data from a string prompt, and how each part of the process can be tested in isolation.

LLMs use strings. React generates strings. We know React. Let's use React to render prompts. This article introduces mdx-prompt, a library that lets you write your prompts in JSX and then render them to a string. It's great for React applications and React developers.

In this article I break down how I built the core of bragdoc.ai in about 3 weeks over the Christmas/New Year break. The secret? AI tooling.

We'll go into detail on how I used Windsurf, the Vercel AI Chat Template, Tailwind UI, and a bunch of other tools to get a product off the ground quickly.