Demystifying OpenAI Assistants - Runs, Threads, Messages, Files and Tools

As I mentioned in the previous post, OpenAI dropped a ton of functionality recently, with the shiny new Assistants API taking center stage. In this release, OpenAI introduced the concepts of Threads, Messages, Runs, Files and Tools - all higher-level concepts that make it a little easier to reason about long-running discussions involving multiple human and AI users.

Prior to this, most of what we did with OpenAI's API was call the chat completions API (setting all the non-text modalities aside for now), but to do so we had to keep passing all of the context of the conversation to OpenAI on each API call. This means persisting conversation state on our end, which is fine, but the Assistants API and related functionality make it easier for developers to get started without reinventing the wheel.
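To make the "keep passing all of the context" point concrete, here is a minimal sketch of the bookkeeping we had to do ourselves. It makes no network calls: `fake_model` is a hypothetical stand-in for a chat completions request, and the `Conversation` class is invented for illustration. The only thing it shows is that the whole message history is handed over on every turn.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def fake_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for a chat completions call (no network involved)."""
    return f"(reply after seeing {len(messages)} messages)"


class Conversation:
    """Holds conversation state on our side, as the pre-Assistants workflow required."""

    def __init__(self, system_prompt: str,
                 complete: Callable[[List[Message]], str] = fake_model) -> None:
        self.messages: List[Message] = [{"role": "system", "content": system_prompt}]
        self.complete = complete

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        # The entire history is sent on every call, not just the new message.
        reply = self.complete(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


chat = Conversation("You are a helpful assistant.")
first = chat.ask("What is a Thread?")
second = chat.ask("And a Run?")
print(first)
print(second)
```

With Threads, that growing list lives on OpenAI's side instead of ours.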

OpenAI Assistants

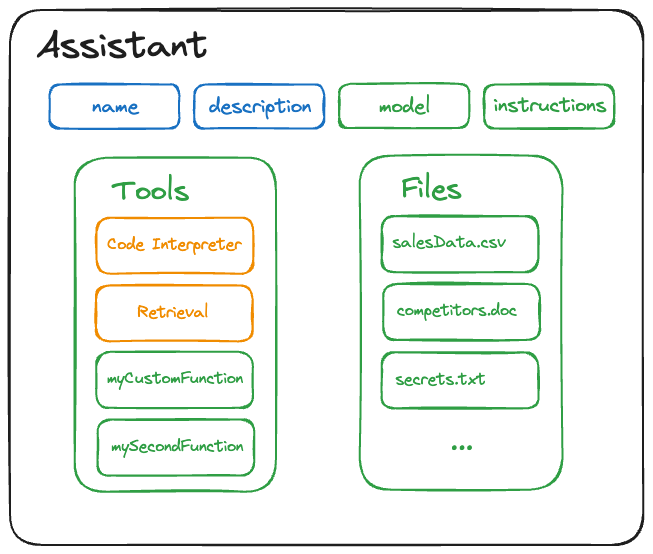

An OpenAI Assistant is defined as an entity with a name, description, instructions, default model, default tools and default files. It looks like this:
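A rough sketch of that shape, written as a plain Python dict. The field names (`tools` entries with a `type`, and `file_ids`) follow my understanding of the Assistants API at launch and should be treated as assumptions; the values are made up for illustration.

```python
import json

# Illustrative only: the values are invented, and the field names are assumed
# to match the Assistants API as it looked at launch.
assistant = {
    "name": "Math Tutor",
    "description": "Helps students work through algebra problems",
    "instructions": "You are a patient tutor. Explain each step briefly.",
    "model": "gpt-4-1106-preview",            # default model for Runs
    "tools": [{"type": "code_interpreter"}],  # default tools
    "file_ids": [],                           # default files (none attached here)
}

print(json.dumps(assistant, indent=2))
```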

Let's break this down a little. The name and description are self-explanatory - you can change them later via the modify Assistant API, but they're otherwise static from Run to Run. The model and instructions fields should also be familiar to you, but in this case they act as defaults and can be easily overridden for a given Run, as we'll see in a moment.
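The "defaults that a Run can override" idea can be sketched without touching the API at all. The `Assistant` and `RunSettings` classes and the `settings_for_run` helper below are hypothetical names of my own, not part of OpenAI's SDK; they only model the fallback behaviour described above.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Assistant:
    """Local model of an Assistant's defaults (hypothetical, not the SDK type)."""
    name: str
    description: str
    instructions: str
    model: str
    tools: Tuple[str, ...] = ()


@dataclass(frozen=True)
class RunSettings:
    """What a single Run would actually use after overrides are applied."""
    model: str
    instructions: str
    tools: Tuple[str, ...]


def settings_for_run(assistant: Assistant,
                     model: Optional[str] = None,
                     instructions: Optional[str] = None,
                     tools: Optional[Tuple[str, ...]] = None) -> RunSettings:
    # Anything not overridden for this Run falls back to the Assistant's default.
    return RunSettings(
        model=assistant.model if model is None else model,
        instructions=assistant.instructions if instructions is None else instructions,
        tools=assistant.tools if tools is None else tools,
    )


tutor = Assistant(
    name="Math Tutor",
    description="Helps with algebra homework",
    instructions="Explain each step briefly.",
    model="gpt-4-1106-preview",
    tools=("code_interpreter",),
)

default_run = settings_for_run(tutor)
cheaper_run = settings_for_run(tutor, model="gpt-3.5-turbo-1106")
print(default_run.model, cheaper_run.model)
```

The Assistant itself is untouched by the override; only that one Run sees the different model.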

Using ChatGPT to generate ChatGPT Assistants

OpenAI dropped a ton of cool stuff in their Dev Day presentations, including some updates to function calling. There are a few function-call-like things that currently exist within the OpenAI ecosystem, so let's take a moment to disambiguate:

- Plugins: introduced in March 2023, allowed GPT to understand and call your HTTP APIs

- Actions: an evolution of Plugins that makes this easier but still calls your HTTP APIs

- Function Calling: ChatGPT understands your functions, tells you how to call them, but does not actually call them

It seems like Plugins are likely to be superseded by Actions, so we end up with two ways to have GPT call your functions - Actions for automatically calling HTTP APIs, Function Calling for indirectly calling anything else. Function Calling might be better named Guided Invocation - despite its name, it doesn't actually call the function, it just tells you how to.

That second category of calls is going to include anything that isn't an HTTP endpoint, so it gives you a lot of flexibility to call internal APIs that never learned how to speak HTTP. Think legacy systems, private APIs that you don't want to expose to the internet, and other places where this can act as a highly adaptable glue.
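Here is a minimal sketch of what that glue can look like on our side. Everything in it is an assumption for illustration: `lookup_order` is a hypothetical internal function, and `model_request` is a hand-written stand-in for what the model hands back (a function name plus a JSON string of arguments). The point is only that our code, not GPT, performs the actual call.

```python
import json
from typing import Any, Callable, Dict


def lookup_order(order_id: str) -> Dict[str, str]:
    """Hypothetical internal function, e.g. a wrapper around a legacy system."""
    return {"order_id": order_id, "status": "shipped"}


# Functions we are willing to let the model ask for, keyed by name.
REGISTRY: Dict[str, Callable[..., Any]] = {"lookup_order": lookup_order}

# Hand-written stand-in for the model's output: it names a function and
# supplies arguments as a JSON string, but it has not called anything.
model_request = {"name": "lookup_order", "arguments": '{"order_id": "A-1001"}'}


def dispatch(request: Dict[str, str], registry: Dict[str, Callable[..., Any]]) -> Any:
    """Run the requested function locally; the model only told us how to call it."""
    func = registry.get(request["name"])
    if func is None:
        raise KeyError(f"model asked for unknown function: {request['name']}")
    kwargs = json.loads(request["arguments"])
    return func(**kwargs)


result = dispatch(model_request, REGISTRY)
print(result)
```

Because the dispatch happens in our own process, the target can be anything reachable from it, HTTP or not. Looking functions up in an explicit registry, rather than by arbitrary name, keeps the model from invoking code we never meant to expose.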