Revisiting Bragdoc

About 9 months ago I launched bragdoc.ai, an AI tool that helps software engineers keep track of their work and turn it into useful documents for performance reviews, weekly updates, and resume sections. I wrote about how I built it in 3 weeks using AI tooling, shipped it, and then... let it sit there while I worked on other things.

But I came back to it recently and gave it a complete overhaul. The core idea remains the same - automatically track your meaningful contributions from git repos and turn them into documents - but pretty much everything else got rebuilt from the ground up.

What changed

The original version worked, but it had some issues. The UI was built around a chatbot interface because, well, that's what the Vercel Chat template gave me and I was moving fast. It worked fine but it always felt a bit clunky for what is fundamentally a data management and document generation problem.

Another issue was privacy. Bragdoc doesn't require you to link to GitHub in any way - most employers wouldn't want some random third-party app to have access to their code. Previously, the CLI would extract data from your git repos and send it up to bragdoc.ai's servers, where OpenAI would process it. That's fine for a lot of use cases, but if you're working on proprietary code at a company with strict data policies, it's not so great.

So I rebuilt it with three main goals:

Privacy first: The CLI now sends git data directly to the LLM of your choice, completely bypassing bragdoc.ai's servers. Your code never touches bragdoc.ai. Ever.

Configurable extraction: You get four levels of data extraction to choose from - commit messages only, diff stats, truncated diffs, or full diffs. Pick what makes sense for your privacy requirements and LLM budget.
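
To make the four levels concrete, here's a rough sketch of how a CLI could map each one onto `git log` arguments. The type and function names are hypothetical and this is not bragdoc's actual CLI code; only the git flags themselves (`--max-count`, `--format`, `--stat`, `--patch`) are standard.

```typescript
// Hypothetical names throughout: a sketch, not bragdoc's real implementation.
type ExtractionLevel = "messages" | "stats" | "truncated-diffs" | "full-diffs";

// Build `git log` arguments for a level. All flags are standard git options.
function gitLogArgs(level: ExtractionLevel, maxCommits = 50): string[] {
  const base = ["log", `--max-count=${maxCommits}`, "--format=%H%n%s%n%b"];
  switch (level) {
    case "messages":
      return base; // commit hash, subject and body only
    case "stats":
      return [...base, "--stat"]; // adds files changed and line counts
    case "truncated-diffs":
    case "full-diffs":
      return [...base, "--patch"]; // adds patch text; truncation happens afterwards
  }
}

// Cap a diff at a character budget so one huge commit can't eat the LLM budget.
function truncateDiff(diff: string, maxChars: number): string {
  if (diff.length <= maxChars) return diff;
  return diff.slice(0, maxChars) + "\n[diff truncated]";
}
```

The "truncated diffs" level here is just the full patch passed through `truncateDiff`, which keeps the privacy decision in one place: whatever the function returns is the most that ever gets sent to the LLM.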

Building an LLM Router with mdx-prompt and NextJS

A few weeks ago I released mdx-prompt, which makes it easy for React developers to create composable, reusable LLM prompts with JSX. Because most AI-heavy apps will use multiple different LLM prompts, and because those prompts often have a lot in common, it's useful to be able to componentize those common elements and reuse them across multiple prompts.

I've applied mdx-prompt pretty much across the board on Task Demon and Bragdoc, which has a dozen or so different LLM prompts at the moment. In a follow-up post I showed how I use mdx-prompt to build the prompt that extracts achievements from git commit messages for bragdoc.ai - allowing us to build a streaming, live-updating UI powered by a composable, reusable AI prompt.

This time we're going to look at the LLM Router that serves as the entrypoint for bragdoc.ai's chatbot. LLM Routers are a common pattern in AI apps, and a well-built one lets your users get far more done through your AI app.

LLM Routers

bragdoc.ai basically does two things: extract work achievements from text, and generate documents based on those achievements. We can create highly tailored prompts and AI workflows for each case to make it more likely that our AI will do the right thing. But we also want to support a conversational, AI-driven UI that lets the user do in natural language most of what they can do directly through the regular UI.

That's pretty open-ended - how do we solve this? One powerful tool in our belt is the LLM Router: we first ask an LLM what kind of message we're dealing with, and then route the message to a second LLM call for processing. The first call can use a general-purpose prompt that understands just enough about your application to delegate to the right tool for the job.

The secondary LLM calls can be a variety of specialized calls, or chains of calls, each tightly focused on a specific objective. In bragdoc.ai, for example, one of these specialized prompts generates documents based on work achievements.
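
Stripped of any particular SDK, the two-step routing described above could look something like the sketch below. The route names, prompt wording and the `CompleteFn` type are all assumptions for illustration; this is not bragdoc.ai's actual router, and it deliberately uses plain strings rather than mdx-prompt.

```typescript
// Hypothetical route names and prompt wording, for illustration only.
type RouteName = "extract_achievements" | "generate_document" | "general_chat";

const ROUTE_NAMES: RouteName[] = [
  "extract_achievements",
  "generate_document",
  "general_chat",
];

// Any function that sends a prompt to an LLM and resolves to its text reply.
type CompleteFn = (prompt: string) => Promise<string>;

// First call: a general-purpose prompt that only has to classify the message.
function buildRouterPrompt(userMessage: string): string {
  return [
    "Classify the user's message as exactly one of:",
    ...ROUTE_NAMES.map((r) => `- ${r}`),
    "Reply with the route name only.",
    `Message: ${userMessage}`,
  ].join("\n");
}

// Models sometimes add whitespace or change casing; fall back to general chat.
function parseRoute(raw: string): RouteName {
  const cleaned = raw.trim().toLowerCase();
  const match = ROUTE_NAMES.find((r) => r === cleaned);
  return match ?? "general_chat";
}

// Second call: delegate to the specialized handler for the chosen route.
async function routeMessage(
  userMessage: string,
  complete: CompleteFn,
  handlers: Record<RouteName, (message: string) => Promise<string>>,
): Promise<string> {
  const route = parseRoute(await complete(buildRouterPrompt(userMessage)));
  return handlers[route](userMessage);
}
```

Passing the LLM call in as `complete` keeps the router independent of any provider, and it means the routing logic can be exercised in tests with a stub that returns a fixed route name.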

Introducing Task Demon: Vibe Coding with a Plan

In the last 6 months, the way that leading software engineers build software has undergone a fundamental shift.

The adoption of agentic AI coding assistants has heralded the greatest leap in productivity I have encountered in my 20-year career so far. As I wrote previously, adopting Windsurf doubled my output within a week. Where usually I'd be thrilled to find some way to get 20% more done, and would work hard for that 20%, suddenly I'm getting 100% and it's just... easy.

But if there's a single consistent counter-punch to the Vibe Coding movement, it's the irrefutable fact that no matter how good the agentic AI coding assistant is, it will always do much better work from a detailed prompt that includes a plan than from your two-sentence vibe code prompt.

That's what Task Demon does: it takes the two-sentence vibe prompt and blows it up into a sublimely detailed prompt, usually anywhere between 200 and 1000 lines long, with a full implementation plan that guides the AI to do the right thing using your project's structure, dependencies and ways of doing things.

A video is worth a million words. This one is 15 minutes but if you use AI to build software, I believe you'll find it worth it:

How it works

After using Windsurf and later Claude Code for a while, I found that using the following pattern yielded superb results:

Demystifying OpenAI Assistants - Runs, Threads, Messages, Files and Tools

As I mentioned in the previous post, OpenAI dropped a ton of functionality recently, with the shiny new Assistants API taking center stage. In this release, OpenAI introduced the concepts of Threads, Messages, Runs, Files and Tools - all higher-level concepts that make it a little easier to reason about long-running discussions involving multiple human and AI users.

Prior to this, most of what we did with OpenAI's API was call the chat completions API (setting all the non-text modalities aside for now), but to do so we had to keep passing all of the context of the conversation to OpenAI on each API call. This means persisting conversation state on our end, which is fine, but the Assistants API and related functionality make it easier for developers to get started without reinventing the wheel.
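
Here's a small sketch of what "persisting conversation state on our end" means in practice. The `role`/`content` message shape matches what the chat completions API expects; the `Conversation` class and the `ChatFn` type are hypothetical stand-ins, so no real SDK call appears here.

```typescript
// The role/content shape mirrors chat completions messages; the rest is illustrative.
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Any function that sends the full message list to a chat model and returns its reply.
type ChatFn = (messages: ChatMessage[]) => Promise<string>;

class Conversation {
  private messages: ChatMessage[];

  constructor(systemPrompt: string) {
    this.messages = [{ role: "system", content: systemPrompt }];
  }

  // The API is stateless, so each request carries the entire transcript so far.
  pendingRequest(userText: string): ChatMessage[] {
    return [...this.messages, { role: "user", content: userText }];
  }

  async send(userText: string, chat: ChatFn): Promise<string> {
    const request = this.pendingRequest(userText);
    const reply = await chat(request);
    // Persist both sides of the turn so the next call includes them.
    this.messages = [...request, { role: "assistant", content: reply }];
    return reply;
  }
}
```

Every call to `send` ships a longer array than the one before it. That growing transcript is the bookkeeping that Threads and Messages take off your hands.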

OpenAI Assistants

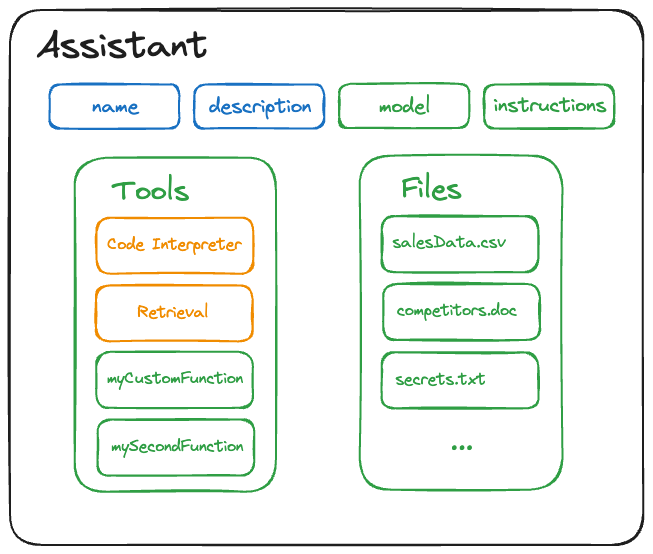

An OpenAI Assistant is defined as an entity with a name, description, instructions, default model, default tools and default files. It looks like this:

Let's break this down a little. The name and description are self-explanatory - you can change them later via the modify Assistant API, but they're otherwise static from Run to Run. The model and instructions fields should also be familiar to you, but in this case they act as defaults and can be easily overridden for a given Run, as we'll see in a moment.
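
As a way of picturing the fields listed above and how Run-level overrides interact with them, here's an illustrative sketch. The field names follow the list in the text, but the `AssistantSketch` type, the simplified `tools` and `fileIds` arrays and the `effectiveRunSettings` helper are my own assumptions, not the exact shape the OpenAI API returns.

```typescript
// Illustrative shape only: field names follow the prose, not the exact API response.
type AssistantSketch = {
  name: string;
  description: string;
  instructions: string; // default instructions
  model: string;        // default model
  tools: string[];      // simplified to tool type names
  fileIds: string[];    // simplified to a list of file identifiers
};

// A Run may override the Assistant's default model and instructions.
type RunOverrides = Partial<Pick<AssistantSketch, "model" | "instructions">>;

// Resolve what a given Run would actually use: override if present, else the default.
function effectiveRunSettings(
  assistant: AssistantSketch,
  overrides: RunOverrides = {},
): { model: string; instructions: string; tools: string[] } {
  return {
    model: overrides.model ?? assistant.model,
    instructions: overrides.instructions ?? assistant.instructions,
    tools: assistant.tools,
  };
}

// Example values are made up for illustration.
const exampleAssistant: AssistantSketch = {
  name: "Math Tutor",
  description: "Helps with maths questions",
  instructions: "You are a patient maths tutor.",
  model: "gpt-4-1106-preview",
  tools: ["code_interpreter"],
  fileIds: [],
};

const exampleRun = effectiveRunSettings(exampleAssistant, { model: "gpt-3.5-turbo" });
```

In this example `exampleRun` uses the overridden model for that one Run while keeping the Assistant's default instructions, and the Assistant itself is left unchanged.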